From 16 Seconds to 1.3: A Journey Through Performance Optimization - Part 1

- Stefano

- Programming

- 21 Oct, 2025

Recently, I decided to learn Rust - not just for career pivot reasons, but to understand if modern language design could actually deliver on the “zero-cost abstractions” promise.

My test? Build a market data aggregator that processes 10 million trades and computes OHLCV (Open/High/Low/Close/Volume) bars. First in C++, optimizing ruthlessly. Then in Rust, comparing performance.

What I discovered surprised me. But let’s start at the beginning.

The C++ Optimization Journey

Phase 1: The Naive Implementation (16.5 seconds on my linux machine)

I started with straightforward, functional-style C++ code:

// Read CSV line by linestd::ifstream file(filename);std::string line;std::map<uint64_t, std::vector<Trade>> windows;

while (std::getline(file, line)) { auto trade = parse_trade(line); // Using std::stod, std::stoull auto window = to_window(trade.timestamp_ms); windows[window].push_back(trade);}

// Aggregate using functional patternsstd::vector<OHLCV> bars;std::transform(windows.begin(), windows.end(), std::back_inserter(bars), [](const auto& pair) { return aggregate_trades(pair.first, pair.second); });Result: 16,529ms to process 10M trades

This is slow. But why? If you create a flamegraph you can see where the time is spent but that doesn’t give you a direction to start moving in the right direction. Below in the interactive graph you can see that we spend a lot of time in the push_back function of the std::vector class but that time is well spent?

Phase 1 Flamegraph - Click sections to zoom, search with Ctrl+F

Understanding the Bottleneck: The Three Types of “Bound”

Before optimizing, I needed to understand what was actually slow. There are three main bottleneck categories:

1. I/O Bound - Waiting for disk/network

- Symptoms: Low CPU usage, high context switches

- Common in: File readers, network services

- Solution: Async I/O, buffering, caching

2. Memory Bound - Waiting for RAM

- Symptoms: High cache miss rate, normal CPU usage

- Common in: Random access patterns, pointer chasing

- Solution: Better data structures, prefetching, cache-friendly layouts

3. CPU Bound - Waiting for computation

- Symptoms: 100% CPU, low cache misses

- Common in: Heavy computation, string parsing

- Solution: Better algorithms, SIMD, parallel processing

Investigation: What’s Actually Slow?

My initial hypothesis: “It’s reading a file, so it must be I/O bound.”

Let’s test with perf stat:

perf stat ./trade_aggregator trades.csv16,665.35 msec task-clock # 1.000 CPUs utilized 643 context-switches # 38.583 /sec 132,344 page-faults # 7.941 K/secSurprise #1: It’s NOT I/O bound!

- 100% CPU utilization (not waiting on I/O)

- Low context switches (not blocking)

- High page faults (lots of allocations)

Next hypothesis: “Is it memory-bound or compute-bound?” Let’s run perf to get stats about the stuff is interesting to know when investigating memory-bound processes.

perf stat -e cycles,instructions,cache-misses,cache-references ./trade_aggregator trades.csvcache-misses: 29,597,938cache-references: 108,614,903→ 27.25% cache miss rate ← MEMORY BOUND!The smoking gun: 27% of cache accesses miss!

Cache miss interpretation:

- < 5% = CPU doing work (compute-bound)

- 10-20% = Moderate memory pressure

- 27% = Significantly memory-bound ← We’re here

- bigger than 30% = severe bottleneck

But which functions cause the misses?

perf record -e cache-misses -g ./trade_aggregator trades.csvperf report26% - std::vector::push_back31% - std::map operations (tree traversal)Root cause identified:

std::map<uint64_t, vector<Trade>>= scattered memory- Each window’s vector allocated separately

- Tree rebalancing causes cache evictions

- Random access pattern = cache thrashing

Phase 2: Memory Optimization (6.5 seconds - 2.5x faster!)

Armed with knowledge, I made targeted changes:

// 1. Hash table instead of tree (O(1) vs O(log n))std::unordered_map<uint64_t, std::vector<Trade>> windows;

// 2. Pre-allocate to avoid reallocationswindows.reserve(200); // Avoid rehashing

// 3. Reserve vector capacityauto& window_trades = windows[window];if (window_trades.empty()) { window_trades.reserve(100000); // Avoid push_back reallocations}

// 4. Single-pass aggregation (instead of multiple passes)for (const auto& trade : trades) { if (trade.price < min_price) min_price = trade.price; if (trade.price > max_price) max_price = trade.price; total_volume += trade.volume;}Results:

Time: 16,529ms → 6,508ms (2.5x faster)Instructions: 139B → 58B (2.4x fewer!)Cache misses: 38.9M → 18.6M (2x fewer)Key lesson: Data structure choice matters more than micro-optimizations.

Phase 3: Eliminate Parsing Overhead (1.8 seconds - 3.7x faster!)

The next bottleneck? String parsing with std::stod and std::stoull.

Two optimizations:

1. Memory-mapped I/O (instead of getline())

// Map entire file into memorychar* file_data = mmap(nullptr, file_size, PROT_READ, MAP_PRIVATE, fd, 0);madvise(file_data, file_size, MADV_SEQUENTIAL);

// Parse directly from mapped buffer (zero-copy)const char* ptr = file_data;while (ptr < end) { parse_trade_mmap(ptr, trade);}2. Custom parsing (5x faster than std::stod)

inline double parse_double(const char*& ptr) { double result = 0.0; // Parse integer part while (*ptr >= '0' && *ptr <= '9') { result = result * 10.0 + (*ptr - '0'); ++ptr; } // Parse decimal if (*ptr == '.') { ++ptr; double fraction = 0.1; while (*ptr >= '0' && *ptr <= '9') { result += (*ptr - '0') * fraction; fraction *= 0.1; ++ptr; } } return result;}Results:

Time: 6,508ms → 1,777ms (3.7x faster!)Instructions: 58B → 16.5B (3.5x fewer!)IPC: 1.97 → 2.03 (better CPU utilization)Total improvement so far: 16.5s → 1.8s (9.3x speedup!)

Phase 4: SIMD Vectorization (1.7 seconds - 1.05x faster)

Final optimization: use AVX2 to process 4 doubles at once.

#include <immintrin.h>

OHLCV aggregate_trades_simd(uint64_t window, const std::vector<Trade>& trades) { __m256d vec_min = _mm256_set1_pd(trades[0].price); __m256d vec_max = _mm256_set1_pd(trades[0].price); __m256d vec_sum = _mm256_setzero_pd();

// Process 4 trades at once for (size_t i = 0; i + 3 < n; i += 4) { __m256d prices = _mm256_set_pd( trades[i+3].price, trades[i+2].price, trades[i+1].price, trades[i].price ); vec_min = _mm256_min_pd(vec_min, prices); vec_max = _mm256_max_pd(vec_max, prices); // ... accumulate volumes } // Horizontal reduction + handle remaining elements}Results:

Time: 1,777ms → 1,691ms (5% improvement)IPC: 2.03 → 2.10 (better instruction throughput)Why only 5%?

Amdahl’s Law: Aggregation is only ~10% of total time. Even 4x SIMD speedup on 10% = ~1.03x overall.

Most time is still in parsing (>90%), which is harder to vectorize.

The lesson: Optimize the bottleneck first. SIMD is powerful but can’t overcome architectural issues.

The Cache Miss Paradox

One counterintuitive finding throughout this journey:

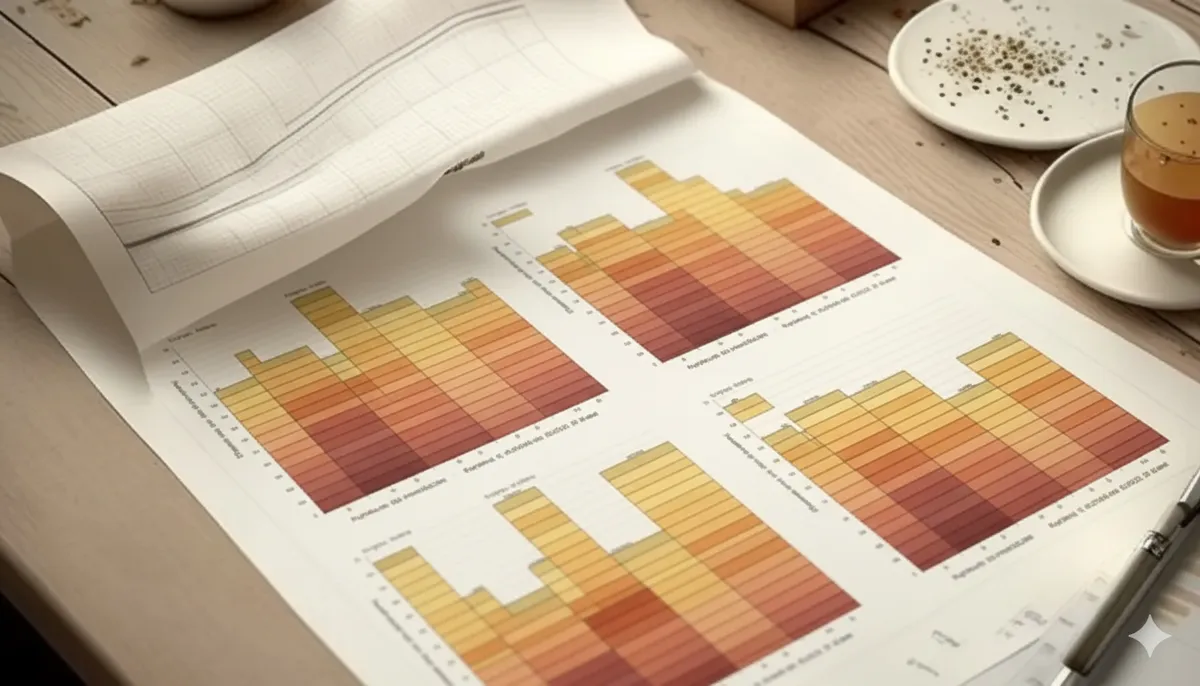

| Phase | Cache Miss Rate | Time |

|---|---|---|

| Phase 1 | 29% | 16.5s |

| Phase 2 | 39% | 6.5s |

| Phase 4 | 63% | 1.7s (fastest!) |

How can worse cache miss rate = better performance?

Because we’re doing fundamentally less work:

- Phase 1: 139B instructions

- Phase 4: 16.1B instructions (8.6x fewer!)

The insight: Absolute time spent on cache misses matters, not the percentage.

Even with higher miss rates, Phase 4 accesses memory 3.6x less often:

- Phase 1: 110M cache references

- Phase 4: 31M cache references

Better to miss 63% on 31M accesses than 29% on 110M accesses.

Final C++ Stats

Phase 1 → Phase 4: 16,529ms → 1,691ms (9.8x speedup)

Breakdown:├─ Phase 2 (Memory): 2.5x (unordered_map, reserves)├─ Phase 3 (Parsing): 3.7x (mmap, custom parsing)└─ Phase 4 (SIMD): 1.05x (vectorization)

Performance counters (Phase 4):- Cycles: 7.9B- Instructions: 16.1B- IPC: 2.05- Cache miss rate: 63%I was satisfied. 10M trades in 1.7 seconds felt good.

Then I ported it to Rust.