CLion with multiple CUDA SDKs

- Stefano

- Programming, Data science

- 02 Dec, 2023

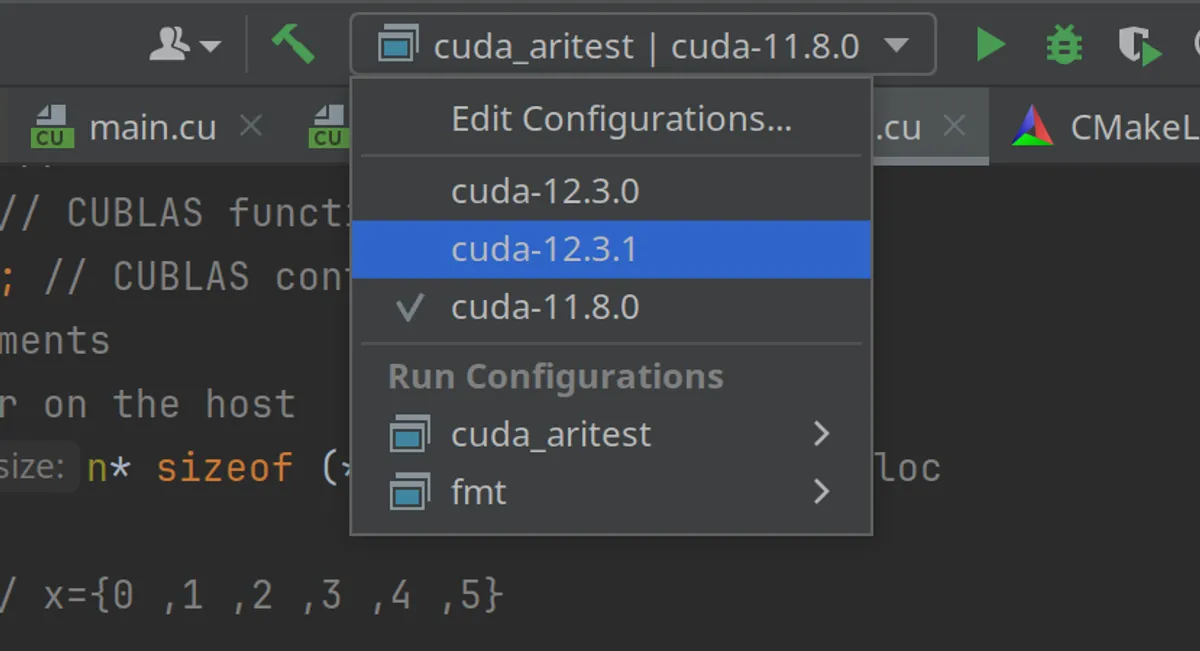

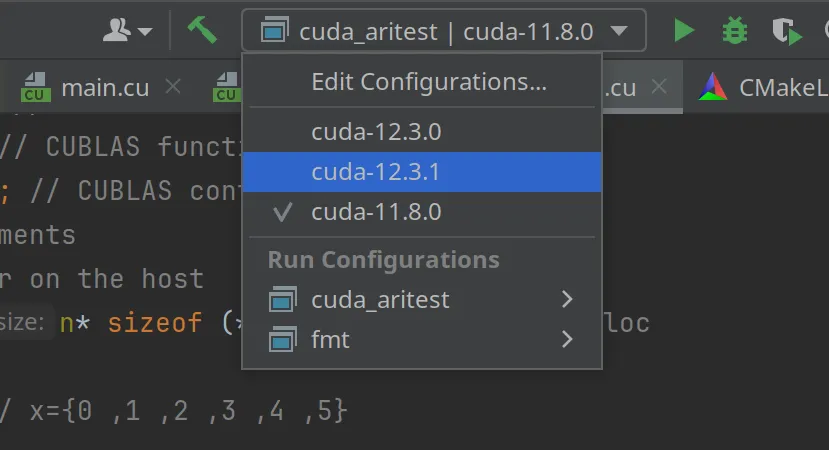

There are several ways to set up multiple toolchains with different CUDA versions when using CLion. The easiest way I found was to use micromamba, a lightweight (mini|ana)conda variant, and then use the environment script to configure the toolchains in CLion. The idea is to be able to quickly switch from one sdk to the other, like the following

Micromamba (conda) environments

You can install micromamba by following the official installation instructions. On Linux it comes down to running the install script:

```console
ice@kube:~$ "${SHELL}" <(curl -L micro.mamba.pm/install.sh)
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100  3069  100  3069    0     0   7525      0 --:--:-- --:--:-- --:--:--  149k
Micromamba binary folder? [~/.local/bin] y
Init shell (bash)? [Y/n] Y
Configure conda-forge? [Y/n] Y
Prefix location? [~/micromamba]
Modifying RC file "/home/ice/.bashrc"
Generating config for root prefix "/home/ice/micromamba"
Setting mamba executable to: "/home/ice/y/micromamba"
Adding (or replacing) the following in your "/home/ice/.bashrc" file

# >>> mamba initialize >>>
# !! Contents within this block are managed by 'mamba init' !!
export MAMBA_EXE='/home/ice/y/micromamba';
export MAMBA_ROOT_PREFIX='/home/ice/micromamba';
__mamba_setup="$("$MAMBA_EXE" shell hook --shell bash --root-prefix "$MAMBA_ROOT_PREFIX" 2> /dev/null)"
if [ $? -eq 0 ]; then
    eval "$__mamba_setup"
else
    alias micromamba="$MAMBA_EXE"  # Fallback on help from mamba activate
fi
unset __mamba_setup
# <<< mamba initialize <<<

Please restart your shell to activate micromamba or run the following:
  source ~/.bashrc (or ~/.zshrc, ~/.xonshrc, ~/.config/fish/config.fish, ...)
```

Once it is installed, take note of the code between `# >>> mamba initialize >>>` and `# <<< mamba initialize <<<`: we will need it later, when we create the environment scripts for the toolchains. The paths in that block depend on your answers to the installer prompts, so copy it from your own rc file rather than from this post.

With micromamba installed, you can start installing the CUDA SDKs, for example 11.8.0:

```bash
# create and activate the env
micromamba create --name cuda-11.8.0
micromamba activate cuda-11.8.0

# install the needed packages for CUDA development
micromamba install -c "nvidia/label/cuda-11.8.0" cuda-libraries-dev cuda-nvcc cuda-cudart-static

# here I install gcc and g++ using a compatible version
micromamba install gcc=11.4.0 gxx=11.4.0
```

For 12.3.0 and 12.3.1:

```bash
# create and activate the env
micromamba create --name cuda-12.3.0
micromamba activate cuda-12.3.0

# install the needed packages for CUDA development
micromamba install -c "nvidia/label/cuda-12.3.0" cuda-libraries-dev cuda-nvcc cuda-cudart-static

# install gcc and g++, packages coming from conda-forge
micromamba install gcc=12.3.0 gxx=12.3.0
```

```bash
# create and activate the env
micromamba create --name cuda-12.3.1
micromamba activate cuda-12.3.1

# install the needed packages for CUDA development
micromamba install -c "nvidia/label/cuda-12.3.1" cuda-libraries-dev cuda-nvcc cuda-cudart-static

# install gcc and g++, packages coming from conda-forge
micromamba install gcc=12.3.0 gxx=12.3.0
```

Now the environments are ready, with all their files installed under ~/micromamba/envs/… and without polluting the system, so they are easy to remove or update.
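The environment blocks above differ only in the CUDA version and the matching gcc version, so they can be folded into a small helper. This is a minimal sketch, not the exact procedure I followed: `make_cuda_env` and the `RUN` switch are hypothetical names, and the helper passes `--name` to each command instead of activating the environment, because `micromamba activate` only works in a shell where the micromamba hook has been loaded. By default it only prints the commands; set `RUN=1` to execute them.

```bash
#!/usr/bin/env bash
# Sketch: build one "CUDA SDK + compatible gcc" micromamba env per call.
# Assumptions: env names follow the cuda-<version> pattern used above, and
# conda-forge is the default channel (as configured by the installer).
# Commands are only printed unless RUN=1 is set.
set -eo pipefail

# make_cuda_env CUDA_VERSION GCC_VERSION
make_cuda_env() {
    local cuda="$1" gcc="$2"
    local env="cuda-${cuda}"
    local -a steps=(
        "micromamba create -y --name ${env}"
        "micromamba install -y --name ${env} -c nvidia/label/cuda-${cuda} cuda-libraries-dev cuda-nvcc cuda-cudart-static"
        "micromamba install -y --name ${env} gcc=${gcc} gxx=${gcc}"
    )
    local step
    local -a argv
    for step in "${steps[@]}"; do
        echo "+ ${step}"
        if [[ "${RUN:-0}" == 1 ]]; then
            # Split the command string on whitespace and run it.
            read -ra argv <<< "${step}"
            "${argv[@]}"
        fi
    done
}

# The CUDA/gcc pairs used in this post.
make_cuda_env 11.8.0 11.4.0
make_cuda_env 12.3.0 12.3.0
make_cuda_env 12.3.1 12.3.0
```

For example, saving it as `make_cuda_envs.sh` (any name works) and running `RUN=1 bash make_cuda_envs.sh` would create all three environments in one go.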

CLion Toolchains

Now we need to create a shell script for each of the conda environments we created, to be used as the environment file of the corresponding CLion toolchain. One script could be called load_cuda_11.8.0.sh, with the following content:

# >>> mamba initialize >>># !! Contents within this block are managed by 'mamba init' !!export MAMBA_EXE='/home/ice/y/micromamba';export MAMBA_ROOT_PREFIX='/home/ice/micromamba';__mamba_setup="$("$MAMBA_EXE" shell hook --shell bash --root-prefix "$MAMBA_ROOT_PREFIX" 2> /dev/null)"if [ $? -eq 0 ]; then eval "$__mamba_setup"else alias micromamba="$MAMBA_EXE" # Fallback on help from mamba activatefiunset __mamba_setup# <<< mamba initialize <<<

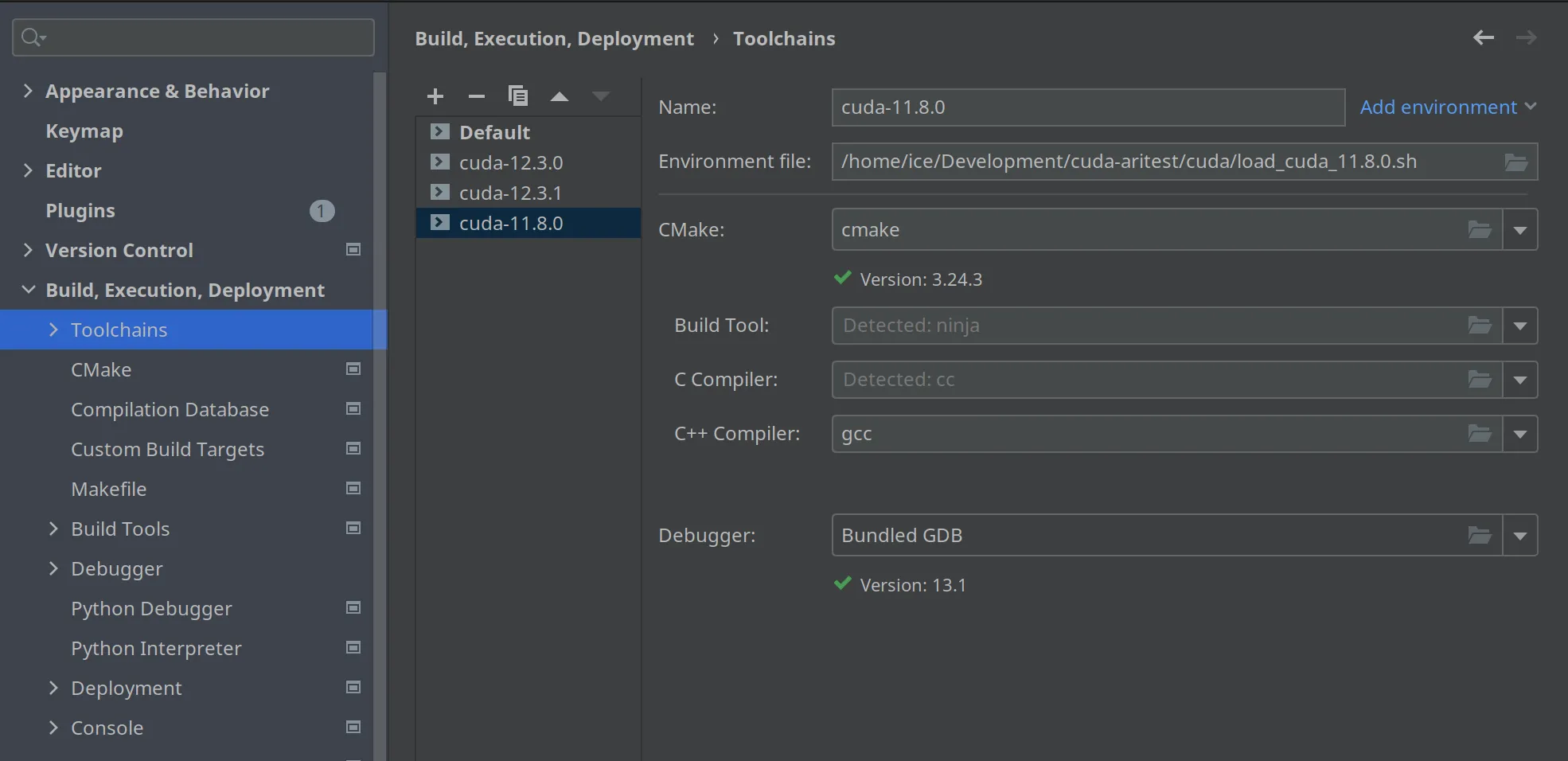

micromamba activate cuda-11.8.0Now we can add a toolchain in CLion, by using the above script as an environment file. Here I show my toolchains
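Since every toolchain needs its own copy of this script, you may prefer to generate them. This is a minimal sketch, assuming the environment names created earlier; `write_env_script` and `OUT_DIR` are hypothetical names. The generated file is a trimmed-down version of the init block (it calls `shell hook` directly and drops the fallback alias). If `MAMBA_EXE` and `MAMBA_ROOT_PREFIX` are already exported by your rc file, their values are reused; otherwise the installer defaults shown in the prompts above (`~/.local/bin` and `~/micromamba`) are assumed.

```bash
#!/usr/bin/env bash
# Sketch: write one CLion environment script per micromamba env.
# Assumptions: env names match those created earlier; MAMBA_EXE and
# MAMBA_ROOT_PREFIX fall back to the installer defaults if not exported.
set -eo pipefail

MAMBA_EXE="${MAMBA_EXE:-$HOME/.local/bin/micromamba}"
MAMBA_ROOT_PREFIX="${MAMBA_ROOT_PREFIX:-$HOME/micromamba}"
OUT_DIR="${OUT_DIR:-./clion-envs}"

# write_env_script ENV_NAME: writes load_<env>.sh (first '-' becomes '_'),
# e.g. cuda-11.8.0 -> load_cuda_11.8.0.sh, and prints its path.
write_env_script() {
    local env="$1"
    local file="${OUT_DIR}/load_${env/-/_}.sh"
    # ${...} expands now; \$ is written literally and expands when sourced.
    cat > "$file" <<EOF
# CLion environment script (generated); CLion sources it.
export MAMBA_EXE='${MAMBA_EXE}'
export MAMBA_ROOT_PREFIX='${MAMBA_ROOT_PREFIX}'
eval "\$("\$MAMBA_EXE" shell hook --shell bash --root-prefix "\$MAMBA_ROOT_PREFIX")"
micromamba activate ${env}
EOF
    echo "$file"
}

mkdir -p "$OUT_DIR"
for env in cuda-11.8.0 cuda-12.3.0 cuda-12.3.1; do
    write_env_script "$env"
done
```

Each printed path can then be selected as the environment file of the matching toolchain.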

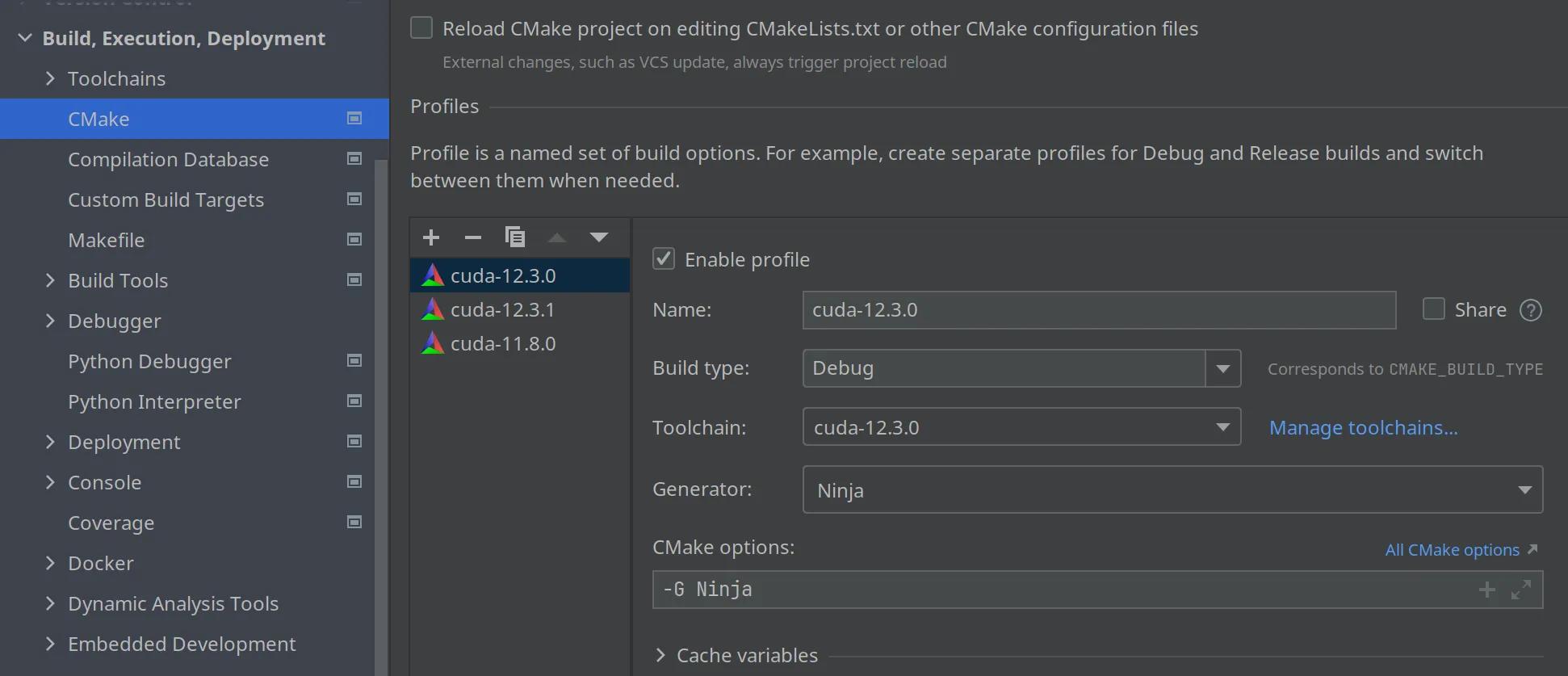

You add a toolchain for every environment you want to use. Once you defined the toolchains, you need to create a Debug|Release configuration based on each toolchain, like here

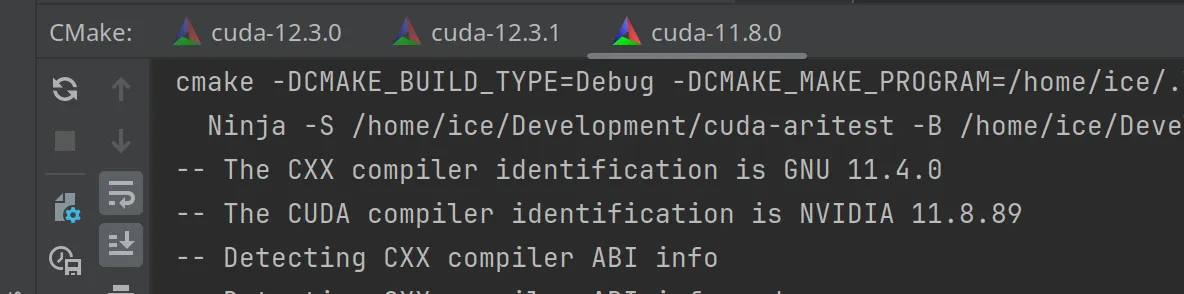

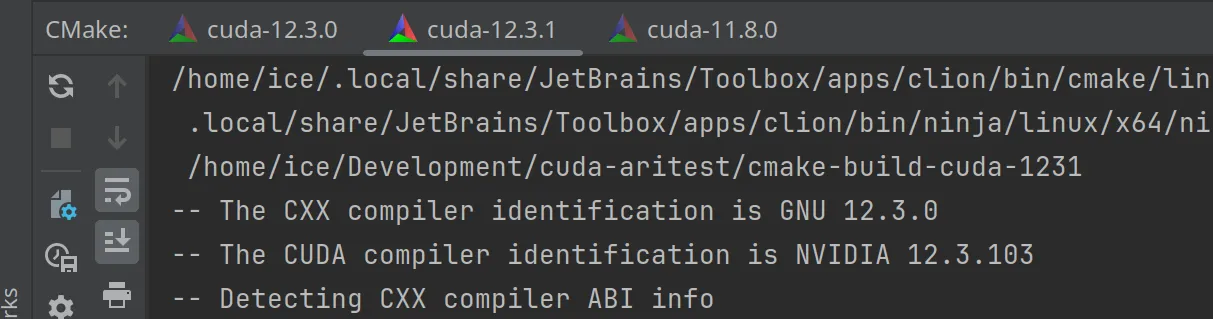

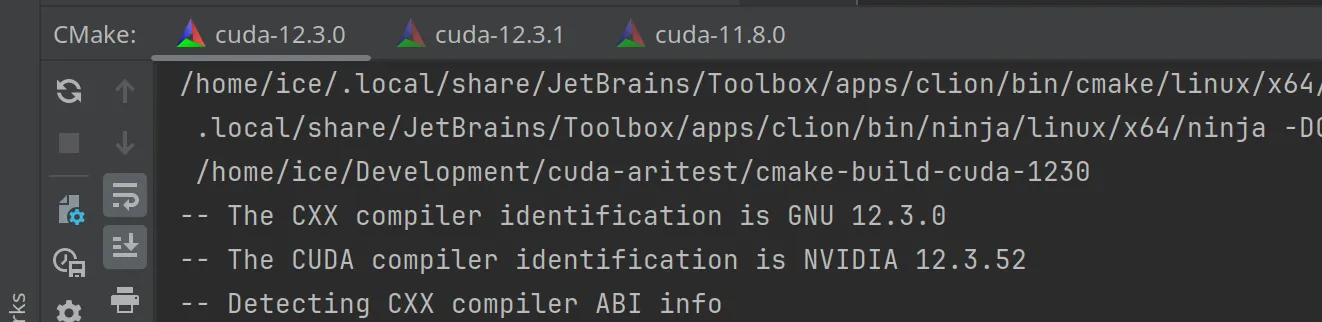

Now when you set up your project, three configurations will be used, as I can show with these three screenshots
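To double-check from a terminal that each configuration picked up the nvcc of its own environment, you can look at the CMake caches. This is a minimal sketch, assuming your project enables the CUDA language (so CMake records `CMAKE_CUDA_COMPILER` in `CMakeCache.txt`) and that the build directories follow CLion's default `cmake-build-*` naming; `report_cuda_compilers` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: show which CUDA compiler each CMake build directory was configured with.
# Assumption: CLion's default "cmake-build-*" directory names; pass the build
# directories explicitly if yours are named differently.

# report_cuda_compilers DIR...: prints "DIR: <CMAKE_CUDA_COMPILER>" per directory.
report_cuda_compilers() {
    local dir cache compiler
    for dir in "$@"; do
        dir="${dir%/}"
        cache="${dir}/CMakeCache.txt"
        if [[ ! -f "$cache" ]]; then
            echo "${dir}: no CMakeCache.txt"
            continue
        fi
        # Cache entries have the form NAME:TYPE=VALUE.
        compiler="$(sed -n 's/^CMAKE_CUDA_COMPILER:[A-Z]*=//p' "$cache" | head -n 1)"
        echo "${dir}: ${compiler:-CMAKE_CUDA_COMPILER not set}"
    done
}

# Use the directories given on the command line, or every cmake-build-* dir.
shopt -s nullglob
build_dirs=("$@")
if (( ${#build_dirs[@]} == 0 )); then
    build_dirs=(cmake-build-*/)
fi
report_cuda_compilers "${build_dirs[@]}"
```

Run it from the project root after CLion has loaded all profiles; if everything is wired correctly, each line should point into a different ~/micromamba/envs/cuda-* directory.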

Troubleshooting

The number of things that can go wrong is huge :-) For example, with CUDA SDK 11.8.0 I found that the libcudadevrt.a from the conda channel was not the right one (at least for the version of the NVIDIA drivers I had), so I had to take that library from the NVIDIA SDK distribution and copy it into my environment, like this:

```bash
cp nvidia-cuda-11.8/libcudadevrt.a /home/ice/micromamba/envs/cuda-11.8.0/lib
```

The nice thing is that I am not messing with my system libraries, just with a local conda environment.
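When something misbehaves, a quick first check is whether the tools found on PATH really come from the active environment and not from the system. This is a minimal sketch, assuming the environment was activated with `micromamba activate` (which sets `CONDA_PREFIX`); `check_tools` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: verify that each tool resolves to a file inside the active env.
# Assumption: the env was activated with micromamba, which sets CONDA_PREFIX.

# check_tools TOOL...: prints ok/FAIL per tool. Returns 1 if any tool is
# missing or lives outside $CONDA_PREFIX, 2 if no environment is active.
check_tools() {
    local prefix="${CONDA_PREFIX:-}"
    if [[ -z "$prefix" ]]; then
        echo "no environment is active (CONDA_PREFIX is empty)" >&2
        return 2
    fi
    local status=0 tool path
    for tool in "$@"; do
        path="$(command -v "$tool" || true)"
        if [[ -n "$path" && "$path" == "$prefix"/* ]]; then
            echo "ok   $tool -> $path"
        else
            echo "FAIL $tool -> ${path:-not found}"
            status=1
        fi
    done
    return "$status"
}
```

For example, in a terminal: `source load_cuda_11.8.0.sh`, source this snippet, then run `check_tools nvcc gcc g++`. A FAIL line shows which tool is still being picked up from the system.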

Well, I hope it helps.