From 5 Seconds to 480ms: A Performance Optimization Journey

- Stefano

- Programming

- 25 Oct, 2025

Act 1: The C++ Journey - Learning Through Measurement

The goal was simple: read a CSV file containing 10 million trades, aggregate them into OHLCV (Open/High/Low/Close/Volume) bars by time window, and do it as fast as possible.
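To make the task concrete, here is a minimal Rust sketch of the data model and the windowing rule. It assumes each CSV line holds timestamp_ms,price,volume with millisecond timestamps (the three fields every parser in this post reads), and that a trade belongs to the window starting at its timestamp rounded down to a multiple of the window width. The name to_window matches the helper called in the Rust Phase 5 code later on, but the body here is an assumption.

```rust
/// One CSV row, assuming the layout `timestamp_ms,price,volume`.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Trade {
    pub timestamp_ms: u64,
    pub price: f64,
    pub volume: f64,
}

/// Start of the window containing `timestamp_ms`: the timestamp rounded down
/// to a multiple of `window_ms` (hypothetical body; panics if `window_ms` is 0).
pub fn to_window(timestamp_ms: u64, window_ms: u64) -> u64 {
    timestamp_ms - timestamp_ms % window_ms
}
```

With 1000 ms windows, to_window(1_700_000_000_123, 1000) returns 1_700_000_000_000, so every trade within the same second lands in the same bar.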

This is the story of how I optimized the same problem in both C++ and Rust. It’s a journey of failures and successes that demonstrates why the process of discovery often matters more than the final destination.

Phase 1: The Naive Implementation (4975ms) - “This Should Be Fast!”

I started with what felt natural: clean, semi-functional C++ using standard library components. After all, C++ is supposed to be fast, right?

```cpp
#include <algorithm>
#include <cstdint>
#include <numeric>
#include <optional>
#include <ranges>   // C++20
#include <sstream>
#include <string>
#include <vector>

// Assumed definitions (not shown in the original snippet)
struct Trade { uint64_t timestamp_ms; double price; double volume; };
struct OHLCV { uint64_t window_start; double open, high, low, close, volume; };

// Phase 1: Functional style with iostreams
std::optional<Trade> parse_trade(const std::string& line) {
    std::istringstream iss(line);
    std::string token;
    std::vector<std::string> tokens;

    while (std::getline(iss, token, ',')) {
        tokens.push_back(token);
    }

    if (tokens.size() != 3) return std::nullopt;

    try {
        return Trade{
            std::stoull(tokens[0]),
            std::stod(tokens[1]),
            std::stod(tokens[2])
        };
    } catch (...) {
        return std::nullopt;
    }
}

// Aggregate using ranges (assumes `trades` is non-empty)
OHLCV aggregate_trades(uint64_t window, const std::vector<Trade>& trades) {
    auto prices = trades | std::views::transform([](const Trade& t) { return t.price; });

    auto total_volume = std::accumulate(
        trades.begin(), trades.end(), 0.0,
        [](double sum, const Trade& t) { return sum + t.volume; }
    );

    return OHLCV{
        window,
        trades.front().price,                 // open
        *std::ranges::max_element(prices),    // high
        *std::ranges::min_element(prices),    // low
        trades.back().price,                  // close
        total_volume
    };
}
```

Result: 4975ms

Not terrible, but far from great. It was time to profile.

I ran perf stat and the results didn’t surprise me:

```
$ perf stat ./trade_aggregator trades.csv
Processed 101 bars in 4835ms

 Performance counter stats:
      4,849.75 msec task-clock   # 1.000 CPUs utilized
            37 context-switches  # Very low, indicating it's CPU-bound
49,556,918,037 instructions      # 2.26 insn per cycle
```

Phase 2: The Obvious Fix That Wasn’t (5025ms) - “Hash Tables Are Faster!”

Everyone knows hash tables offer O(1) average-case insertion versus O(log n) for tree-based maps. I confidently swapped std::map for std::unordered_map:

```cpp
// Surely this will be faster!
std::unordered_map<uint64_t, std::vector<Trade>> windows;
```

Result: 5025ms

Worse. Actually, slightly worse. Why did this fail?

With only 101 distinct time windows:

- std::map: O(log 101) ≈ 7 comparisons per lookup.
- std::unordered_map: involves hash computation, potential collisions, and occasional rehashing.

The overhead of the hashing function, combined with the fact that the output still needed to be sorted by timestamp, cost more than the simple tree operations.

💡 Lesson 1: Context matters. With a small number of keys, “obvious” algorithmic optimizations can backfire.

This is a great example of “asymptotic complexity” vs. “real-world performance.” The constant factors in hashing and memory access for the unordered_map outweighed the logarithmic complexity of std::map for this small number of keys.
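The sorting requirement is easy to see in Rust, which offers the same pair of containers: BTreeMap is an ordered tree map like std::map, and HashMap is a hash map like std::unordered_map. In this sketch (hypothetical function names, counting trades per window to keep it small), the tree map yields bars in time order for free, while the hash map version has to collect and sort its entries before they can be emitted. It illustrates the ordering point only and says nothing about which container is faster on a given machine.

```rust
use std::collections::{BTreeMap, HashMap};

/// Counts trades per window with an ordered map: iteration is already in
/// ascending window order, like std::map.
pub fn bars_btree(timestamps: &[u64], window_ms: u64) -> Vec<(u64, usize)> {
    let mut counts: BTreeMap<u64, usize> = BTreeMap::new();
    for &ts in timestamps {
        *counts.entry(ts - ts % window_ms).or_insert(0) += 1;
    }
    counts.into_iter().collect()
}

/// Same grouping with a hash map, like std::unordered_map: iteration order is
/// unspecified, so the bars must be sorted before they are emitted.
pub fn bars_hash(timestamps: &[u64], window_ms: u64) -> Vec<(u64, usize)> {
    let mut counts: HashMap<u64, usize> = HashMap::new();
    for &ts in timestamps {
        *counts.entry(ts - ts % window_ms).or_insert(0) += 1;
    }
    let mut bars: Vec<(u64, usize)> = counts.into_iter().collect();
    bars.sort_unstable_by_key(|&(window, _)| window); // the extra step a tree map avoids
    bars
}
```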

I should have trusted the profiler from the start:

```
parse_trade: 80% of runtime
├─ strtod: 27.8%
├─ getline (internal): 8.78%
├─ istringstream::init: 6.56%  ← Creating stream objects is expensive!
├─ operator new: 6.13%         ← Constant memory allocations
└─ strtoull: 3.75%
```

Following an itch instead of data is a classic performance-tuning mistake. The profiler is your most honest friend.

Phase 3: The Real Win (2586ms) - “Attack the Bottleneck”

The flamegraph was clear: 80% of the runtime was spent parsing, with istringstream creation alone consuming 6.56%. It was time to eliminate that overhead.

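```cpp
#include <cstdint>
#include <cstdlib>
#include <optional>
#include <string>

// Custom parser - no istringstream, direct pointer arithmetic
std::optional<Trade> parse_trade(const std::string& line) {
    const char* ptr = line.c_str();
    char* end;

    // Parse timestamp (end == ptr means no digits were consumed)
    uint64_t timestamp = std::strtoull(ptr, &end, 10);
    if (end == ptr || *end != ',') return std::nullopt;

    // Parse price
    ptr = end + 1;
    double price = std::strtod(ptr, &end);
    if (end == ptr || *end != ',') return std::nullopt;

    // Parse volume; nothing but an optional '\r' may follow it
    ptr = end + 1;
    double volume = std::strtod(ptr, &end);
    if (end == ptr || (*end != '\0' && *end != '\r')) return std::nullopt;

    return Trade{timestamp, price, volume};
}
```

Result: 2586ms. A 1.92x speedup!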

```
$ perf stat ./trade_aggregator_phase3 trades.csv
Processed 101 bars in 2586ms

 Performance counter stats:
11,733,144,303 cycles        # Half the cycles!
24,917,028,826 instructions  # Half the instructions! 2.12 insn per cycle
```

The new flamegraph confirmed the win:

```
main: 93.7%
├─ strtod: 53.39%  ← Now the dominant cost
├─ getline: 9.05%
└─ other: ~31%
```

By removing the istringstream overhead, we halved the cycle and instruction counts. Now, strtod (the standard C function for parsing doubles) was the clear bottleneck.
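The same “parse in place instead of building a token vector” idea carries over to Rust. As an illustrative sketch (not code from the benchmark), str::split_once hands back borrowed sub-slices of the line, so nothing is allocated per line, and each field goes straight to the standard parser. Beyond avoiding allocation, this version rejects empty fields and any fourth field.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Trade {
    pub timestamp_ms: u64,
    pub price: f64,
    pub volume: f64,
}

/// Parses `timestamp_ms,price,volume` without allocating: every field is a
/// borrowed &str slice of `line`.
pub fn parse_trade(line: &str) -> Option<Trade> {
    let (ts, rest) = line.split_once(',')?;
    let (price, volume) = rest.split_once(',')?;
    Some(Trade {
        // Empty fields fail to parse, so they are rejected here.
        timestamp_ms: ts.parse::<u64>().ok()?,
        price: price.parse::<f64>().ok()?,
        // A fourth field would leave a ',' inside `volume`, which fails to parse.
        volume: volume.parse::<f64>().ok()?,
    })
}
```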

💡 Lesson 2: Profile, identify the true bottleneck, and attack it relentlessly.

Phase 4: The SIMD Temptation (2614ms) - “But AVX2 Should Help!”

Looking at aggregate_trades, I saw an opportunity: finding the minimum and maximum price and the total volume over roughly 100,000 trades per window (10 million trades across 101 windows). This seemed like a perfect use case for SIMD (Single Instruction, Multiple Data).

```cpp
#include <immintrin.h>  // AVX intrinsics; compile with -mavx2 or -march=native

OHLCV aggregate_trades_simd(uint64_t window, const std::vector<Trade>& trades) {
    // ... setup code ...

    __m256d vec_min = _mm256_set1_pd(trades[0].price);
    __m256d vec_max = _mm256_set1_pd(trades[0].price);
    __m256d vec_sum = _mm256_setzero_pd();

    // Process 4 doubles at a time
    for (; i + 4 <= n; i += 4) {
        __m256d vec_prices = _mm256_loadu_pd(&price_data[i]);
        __m256d vec_vols = _mm256_loadu_pd(&volumes[i]);

        vec_min = _mm256_min_pd(vec_min, vec_prices);
        vec_max = _mm256_max_pd(vec_max, vec_prices);
        vec_sum = _mm256_add_pd(vec_sum, vec_vols);
    }

    // Horizontal reduction to get final values...
}
```

Result: 2614ms

No improvement. In fact, it was slightly worse in some runs.

```
$ perf stat ./trade_aggregator_phase4 trades.csv
Processed 101 bars in 2614ms

 Performance counter stats:
11,738,495,430 cycles        # Same as Phase 3
24,991,458,484 instructions  # Slightly MORE instructions
```

Why did SIMD fail?

The profiler told the same story: aggregation was never the bottleneck. Parsing still dominated the runtime at over 50%. The marginal gains from faster aggregation were completely lost in the noise. Worse, the overhead of copying data into contiguous arrays to prepare for SIMD operations cost more than what was saved.
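The horizontal reduction elided in the SIMD snippet is the step that folds the four lanes of each vector into a single value. As a portable illustration only (plain Rust, no AVX intrinsics, hypothetical function name), this sketch keeps four independent min/max/sum accumulators, walks the input four elements at a time, folds the lanes together, and finishes the leftover tail with scalar code. Note that it needs prices and volumes as two separate contiguous slices, which is exactly the copying step described above.

```rust
/// Min/max of `prices` and sum of `volumes` using four independent lanes,
/// mirroring the shape of the AVX loop. Returns None for empty input.
pub fn min_max_sum_4lanes(prices: &[f64], volumes: &[f64]) -> Option<(f64, f64, f64)> {
    assert_eq!(prices.len(), volumes.len(), "one volume per price");
    let first = *prices.first()?;

    // One accumulator per lane, like the four doubles in a __m256d register.
    let mut lo = [first; 4];
    let mut hi = [first; 4];
    let mut sum = [0.0_f64; 4];

    // Main loop: four elements per step.
    let mut p_chunks = prices.chunks_exact(4);
    let mut v_chunks = volumes.chunks_exact(4);
    for (p, v) in (&mut p_chunks).zip(&mut v_chunks) {
        for lane in 0..4 {
            lo[lane] = lo[lane].min(p[lane]);
            hi[lane] = hi[lane].max(p[lane]);
            sum[lane] += v[lane];
        }
    }

    // Horizontal reduction: fold the four lanes into single values.
    let mut min = lo.iter().copied().fold(f64::INFINITY, f64::min);
    let mut max = hi.iter().copied().fold(f64::NEG_INFINITY, f64::max);
    let mut total: f64 = sum.iter().sum();

    // Scalar tail for the last len % 4 elements.
    for (&p, &v) in p_chunks.remainder().iter().zip(v_chunks.remainder()) {
        min = min.min(p);
        max = max.max(p);
        total += v;
    }
    Some((min, max, total))
}
```

Because the lanes add volumes in a different order than a sequential loop, the total can differ from a scalar sum in the last bits; a vectorized sum reassociates the additions in the same way.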

💡 Lesson 3: Optimize the bottleneck, not just what seems “optimizable.” Clever optimizations in the wrong place are a waste of effort.

At this point, the C++ Phase 3 result of 2586ms felt like a practical limit. I had achieved a nearly 2x improvement through systematic, measurement-driven optimization. It was time to try a different approach.

Act 2: Enter Rust - The Surprise

Phase 1: Straightforward, Idiomatic Rust

I spent some time writing a direct, idiomatic Rust equivalent. No unsafe code, no clever tricks—just clean, standard Rust.

```rust
fn parse_trade(line: &str) -> Option<Trade> {
    let parts: Vec<&str> = line.split(',').collect();

    if parts.len() != 3 {
        return None;
    }

    let timestamp_ms = parts[0].parse::<u64>().ok()?;
    let price = parts[1].parse::<f64>().ok()?;
    let volume = parts[2].parse::<f64>().ok()?;

    Some(Trade { timestamp_ms, price, volume })
}

fn aggregate_trades(window: u64, trades: &[Trade]) -> OHLCV {
    let open = trades.first().unwrap().price;
    let close = trades.last().unwrap().price;

    let mut high = f64::NEG_INFINITY;
    let mut low = f64::INFINITY;
    let mut total_volume = 0.0;

    for trade in trades {
        high = high.max(trade.price);
        low = low.min(trade.price);
        total_volume += trade.volume;
    }

    OHLCV { window_start: window, open, high, low, close, volume: total_volume }
}
```

Note: Using f64::NEG_INFINITY and f64::INFINITY is slightly more robust than seeding with the first element, as it handles empty slices gracefully (though unwrap() would panic here anyway).
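One way to get the robustness the note is after is to make the empty case part of the return type. This sketch is an alternative signature, not the benchmarked code: it returns None for an empty slice instead of panicking, using first()? and last()?, and computes high, low, and volume in a single fold. The Trade and OHLCV definitions are assumptions that follow the field names used in this post.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Trade {
    pub timestamp_ms: u64,
    pub price: f64,
    pub volume: f64,
}

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct OHLCV {
    pub window_start: u64,
    pub open: f64,
    pub high: f64,
    pub low: f64,
    pub close: f64,
    pub volume: f64,
}

/// Aggregates one window; returns None instead of panicking on an empty slice.
pub fn aggregate_trades(window: u64, trades: &[Trade]) -> Option<OHLCV> {
    let open = trades.first()?.price;
    let close = trades.last()?.price;

    // Single pass: running max, running min, running volume sum.
    let (high, low, volume) = trades.iter().fold(
        (f64::NEG_INFINITY, f64::INFINITY, 0.0),
        |(hi, lo, vol), t| (hi.max(t.price), lo.min(t.price), vol + t.volume),
    );

    Some(OHLCV { window_start: window, open, high, low, close, volume })
}
```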

Result: 1445ms

This was 1.79x faster than my optimized C++.

```
$ perf stat ./trade_aggregator_rust trades.csv
Processed 101 bars in 1445ms

 Performance counter stats:
 6,598,721,683 cycles              # 44% fewer than C++!
17,171,967,642 instructions        # 31% fewer than C++!
          2.60 insn per cycle      # Better IPC (Instructions Per Cycle)
```

The Investigation: Why Was Rust So Much Faster Out of the Box?

The flamegraph revealed the secret:

```
main: 84%
├─ core::num::dec2flt::from_str: 14.93%  ← Float parsing!
├─ malloc: 4.79%
└─ other: ~64%
```

Compare this to the C++ version:

- C++ Phase 3: 53% of runtime in strtod.
- Rust Phase 1: 15% of runtime in its float parser.

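Rust’s standard library float parser was significantly more efficient than the glibc strtod that my C++ build linked against: the same fundamental operation, with very different performance in the two standard library implementations. One likely factor is that, since Rust 1.55, the standard library has parsed floats with the Eisel-Lemire algorithm, while glibc’s strtod is a general-purpose, locale-aware routine.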

💡 Lesson 4: The quality of a language’s standard library matters. Sometimes, a better “default” beats manual optimization effort.

Phases 2-4: Minor Rust Optimizations (1253ms)

I applied a few more improvements to the Rust version:

Phase 2: Better Allocation Strategy (1383ms)

By pre-allocating memory for vectors and using a buffered reader, we reduce the number of system calls and reallocations.
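The Phase 2 snippet shows only the allocation-related lines. For context, here is a minimal sketch of a complete reading loop built on the same ideas, with its assumptions spelled out: it accepts any BufRead (a BufReader over a File in production, an in-memory byte slice in a test), reuses one String buffer through read_line instead of allocating a new String per line as lines() does, pre-sizes each window's Vec, and silently skips malformed lines. The function name read_windows, the per_window_capacity parameter, and the skip policy are hypothetical.

```rust
use std::collections::BTreeMap;
use std::io::{self, BufRead};

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Trade {
    pub timestamp_ms: u64,
    pub price: f64,
    pub volume: f64,
}

fn parse_trade(line: &str) -> Option<Trade> {
    let mut parts = line.split(',');
    let timestamp_ms = parts.next()?.parse::<u64>().ok()?;
    let price = parts.next()?.parse::<f64>().ok()?;
    let volume = parts.next()?.parse::<f64>().ok()?;
    Some(Trade { timestamp_ms, price, volume })
}

/// Reads `timestamp_ms,price,volume` lines and groups them by window start.
/// Malformed lines are skipped (assumed policy).
pub fn read_windows<R: BufRead>(
    mut reader: R,
    window_ms: u64,
    per_window_capacity: usize,
) -> io::Result<BTreeMap<u64, Vec<Trade>>> {
    let mut windows: BTreeMap<u64, Vec<Trade>> = BTreeMap::new();
    let mut line = String::new(); // one buffer, reused for every line
    loop {
        line.clear();
        if reader.read_line(&mut line)? == 0 {
            break; // end of input
        }
        if let Some(trade) = parse_trade(line.trim_end()) {
            let window = trade.timestamp_ms - trade.timestamp_ms % window_ms;
            windows
                .entry(window)
                .or_insert_with(|| Vec::with_capacity(per_window_capacity))
                .push(trade);
        }
    }
    Ok(windows)
}
```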

```rust
use std::collections::BTreeMap;
use std::io::BufReader;

// Reserve capacity, use a buffered reader
let mut windows: BTreeMap<u64, Vec<Trade>> = BTreeMap::new();
let reader = BufReader::with_capacity(64 * 1024, file);
windows.entry(window)
    .or_insert_with(|| Vec::with_capacity(100_000))
    .push(trade);
```

Phase 3: The fast-float Crate (1253ms)

Swapping the standard parser for a specialized, highly optimized library yielded another significant gain.

```rust
use fast_float::parse;

let price: f64 = parse(parts.next()?).ok()?;
let volume: f64 = parse(parts.next()?).ok()?;
```

Phase 4: The lexical Crate (1327ms)

Interestingly, another popular parsing crate was slightly slower in this specific benchmark.

The best configuration (Phase 3) achieved 1253ms, making it 2.1x faster than the optimized C++.

```
$ perf stat ./phase3 trades.csv
Processed 101 bars in 1253ms

 Performance counter stats:
 5,684,892,977 cycles
16,596,081,812 instructions
          2.92 insn per cycle  # Excellent IPC!
```

At this point, I thought the story was over. “Rust wins because of better, safer defaults.” A nice, clean narrative. But I was wrong.

Act 3: Plot Twist - C++ Strikes Back

Phase 5: The Nuclear Option (490ms) - Going All In

What if I stopped being polite and optimized the C++ version without constraints?

The approach:

- Memory-mapped I/O (mmap): Avoid read() system calls and the copy into user-space buffers by mapping the file directly into the process’s address space.

- Custom Integer/Float Parsers: Write parsers that handle only the expected format, stripping all error handling, locale support, and edge cases.

- Aggressive Pre-allocation: Use an unordered_map again, but this time with enough reserved capacity to prevent rehashing.
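The bodies of these custom parsers are not shown in this post. Purely to illustrate what handling “only the expected format” can mean, here is a hypothetical Rust sketch that uses the byte-slice signatures from the Rust Phase 5 code. It accepts ASCII digits with an optional fractional part and nothing else (no sign, exponent, inf/NaN, or locale), and it builds the value as an integer mantissa divided by an exact power of ten. That single division is correctly rounded as long as the mantissa fits in 53 bits, so short prices like 101.25 come out exact, but longer inputs are not guaranteed to match str::parse or strtod.

```rust
/// Powers of ten that are exactly representable as f64 (10^0 ..= 10^22).
const POW10: [f64; 23] = [
    1e0, 1e1, 1e2, 1e3, 1e4, 1e5, 1e6, 1e7, 1e8, 1e9, 1e10, 1e11,
    1e12, 1e13, 1e14, 1e15, 1e16, 1e17, 1e18, 1e19, 1e20, 1e21, 1e22,
];

/// Reads ASCII digits at `*start` and advances `*start` past them.
/// Returns None if there is no digit. Overflow is NOT checked: the input is
/// assumed to be well formed.
#[inline(always)]
pub fn parse_u64(bytes: &[u8], start: &mut usize) -> Option<u64> {
    let begin = *start;
    let mut value: u64 = 0;
    while let Some(&b) = bytes.get(*start) {
        if !b.is_ascii_digit() {
            break;
        }
        value = value.wrapping_mul(10).wrapping_add(u64::from(b - b'0'));
        *start += 1;
    }
    (*start > begin).then_some(value)
}

/// Parses `digits[.digits]` as mantissa / 10^k. No sign, exponent, inf/NaN,
/// or locale support; more than 22 fractional digits return None.
#[inline(always)]
pub fn parse_f64(bytes: &[u8], start: &mut usize) -> Option<f64> {
    let mut mantissa = parse_u64(bytes, start)?;
    let mut frac_digits = 0usize;
    if bytes.get(*start) == Some(&b'.') {
        *start += 1;
        while let Some(&b) = bytes.get(*start) {
            if !b.is_ascii_digit() {
                break;
            }
            mantissa = mantissa.wrapping_mul(10).wrapping_add(u64::from(b - b'0'));
            frac_digits += 1;
            *start += 1;
        }
    }
    let scale = *POW10.get(frac_digits)?;
    Some(mantissa as f64 / scale)
}
```

Given 1e5, parse_f64 stops at the e and returns 1.0, leaving the e for the caller: exactly the kind of silent misread that makes such parsers brittle.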

```cpp
#include <fcntl.h>     // open
#include <sys/mman.h>  // mmap, madvise
#include <sys/stat.h>  // fstat
#include <cstdint>
#include <unordered_map>
#include <vector>

// Custom ultra-fast parsers - no error handling, assumes perfect format
inline uint64_t parse_uint64(const char*& ptr) { /* ... */ }
inline double parse_double(const char*& ptr) { /* ... */ }

// Memory map the entire file (error checks on open/fstat/mmap omitted)
int fd = open(argv[1], O_RDONLY);
struct stat sb;
fstat(fd, &sb);
char* file_data = static_cast<char*>(
    mmap(nullptr, sb.st_size, PROT_READ, MAP_PRIVATE, fd, 0));
// Tell the kernel we will read this sequentially
madvise(file_data, sb.st_size, MADV_SEQUENTIAL);

// Aggressive pre-allocation
std::unordered_map<uint64_t, std::vector<Trade>> windows;
windows.reserve(500);  // Prevent rehashing

// Parse directly from the memory map
const char* ptr = file_data;
const char* end_ptr = file_data + sb.st_size;  // one past the last byte
Trade trade;
while (ptr < end_ptr) {
    if (parse_trade_mmap(ptr, trade)) {
        // `window` is the start of the trade's time window (computation not shown)
        auto& window_trades = windows[window];
        if (window_trades.empty()) {
            window_trades.reserve(150000);  // Avoid reallocations
        }
        window_trades.push_back(trade);
    }
}
```

Result: 490ms

This was 10.2x faster than the original C++ code and 5.4x faster than the previously optimized version.

```
$ hyperfine --warmup 3 --runs 10 './trade_aggregator_phase5 trades.csv'
  Time (mean ± σ):     490.2 ms ±   1.6 ms
  Range (min … max):   488.6 ms … 493.6 ms
```

The C++ comeback was complete. By stripping away every abstraction, safety check, and convenience, we achieved blistering performance. But at what cost? Look at that code. It’s brittle, platform-specific, and assumes a perfect input format. This is not maintainable code.

💡 Lesson 5: Extreme performance often requires extreme trade-offs in safety, portability, and maintainability.

Act 4: The Final Plot Twist - Rust’s Answer

Phase 5: Same Weapons, Better Results (480ms)

If C++ could go nuclear, so could Rust. I ported the exact same low-level approach.

```rust
use memmap2::Mmap;
use std::collections::HashMap;
use std::fs::File;

// Custom parsers matching C++ logic, operating on byte slices
#[inline(always)]
fn parse_u64(bytes: &[u8], start: &mut usize) -> Option<u64> { /* ... */ }

#[inline(always)]
fn parse_f64(bytes: &[u8], start: &mut usize) -> Option<f64> { /* ... */ }

// Memory-map and parse
let args: Vec<String> = std::env::args().collect();
let file = File::open(&args[1]).expect("Failed to open file");
let mmap = unsafe { Mmap::map(&file).expect("Failed to mmap file") };

// Use libc crate to call madvise
#[cfg(unix)]
unsafe {
    libc::madvise(
        mmap.as_ptr() as *mut libc::c_void,
        mmap.len(),
        libc::MADV_SEQUENTIAL,
    );
}

let mut windows: HashMap<u64, Vec<Trade>> = HashMap::with_capacity(500);

let bytes = &mmap[..];
let mut pos = 0;

while pos < bytes.len() {
    if let Some(trade) = parse_trade_mmap(bytes, &mut pos) {
        let window = to_window(trade.timestamp_ms, 1000);
        windows.entry(window)
            .or_insert_with(|| Vec::with_capacity(150_000))
            .push(trade);
    }
}
```

Result: 480ms
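Because &mmap[..] is just a &[u8], the scanning loop itself does not depend on mmap and can be exercised on an in-memory buffer. This sketch assumes that parse_trade_mmap consumes one line per call and advances pos past it even when parsing fails; otherwise a single bad line would stall the while loop forever. To stay self-contained it swaps the elided custom parsers for standard-library parsing of each field, and the to_window body (round down to a multiple of the window width) is likewise an assumption.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Trade {
    pub timestamp_ms: u64,
    pub price: f64,
    pub volume: f64,
}

/// Window start: timestamp rounded down to a multiple of `window_ms` (assumed).
pub fn to_window(timestamp_ms: u64, window_ms: u64) -> u64 {
    timestamp_ms - timestamp_ms % window_ms
}

/// Parses the line starting at `*pos` and always moves `*pos` past it, so a
/// malformed line cannot stall the scanning loop. Uses std parsing instead of
/// the elided custom parsers.
pub fn parse_trade_mmap(bytes: &[u8], pos: &mut usize) -> Option<Trade> {
    let rest = &bytes[*pos..];
    let line_len = rest.iter().position(|&b| b == b'\n').unwrap_or(rest.len());
    let line = &rest[..line_len];
    *pos += line_len + 1; // step over '\n' (or one past the end on the last line)

    let mut fields = line.split(|&b| b == b',').map(std::str::from_utf8);
    let timestamp_ms = fields.next()?.ok()?.parse::<u64>().ok()?;
    let price = fields.next()?.ok()?.parse::<f64>().ok()?;
    let volume = fields.next()?.ok()?.trim_end().parse::<f64>().ok()?;
    Some(Trade { timestamp_ms, price, volume })
}

/// The Phase 5 loop over any byte slice; `&mmap[..]` is one such slice.
pub fn aggregate_bytes(bytes: &[u8], window_ms: u64) -> HashMap<u64, Vec<Trade>> {
    let mut windows: HashMap<u64, Vec<Trade>> = HashMap::with_capacity(500);
    let mut pos = 0;
    while pos < bytes.len() {
        if let Some(trade) = parse_trade_mmap(bytes, &mut pos) {
            windows
                .entry(to_window(trade.timestamp_ms, window_ms))
                .or_insert_with(|| Vec::with_capacity(150_000))
                .push(trade);
        }
    }
    windows
}
```

Keeping the loop generic over &[u8] also means the fragile part (the parsers) can be unit-tested against tricky inputs without touching the file system.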

```
$ hyperfine --warmup 3 --runs 10 './phase5 trades.csv'
  Time (mean ± σ):     479.8 ms ±   3.7 ms
  Range (min … max):   475.6 ms … 487.7 ms
```

Rust wins by a margin of 2.1%.

When applying the same aggressive, low-level techniques, both languages perform almost identically, with Rust having a slight edge.

Act 5: The Real Lesson - Engineering Judgment

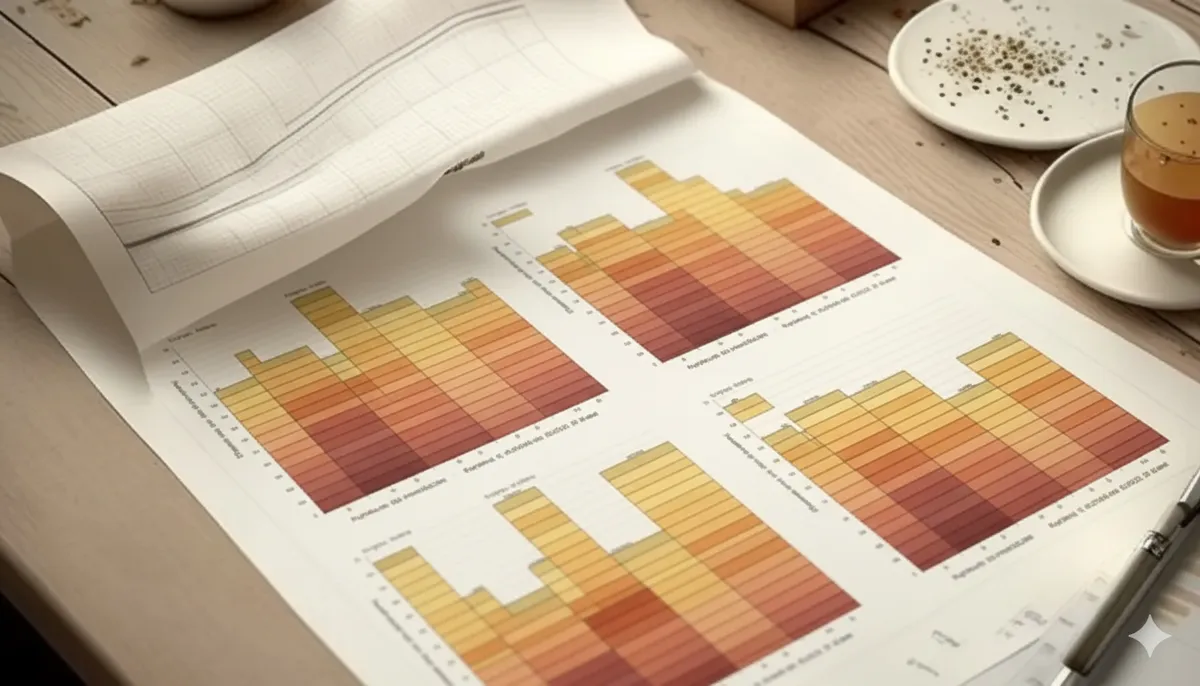

The Complete Journey

| Phase | Language | Time | Speedup (vs. C++ Phase 1) | Code Quality |
|---|---|---|---|---|
| C++ Journey | | | | |
| Phase 1: iostreams | C++ | 4975ms | 1.0x | ⭐⭐⭐⭐⭐ Maintainable |
| Phase 2: unordered_map | C++ | 5025ms | 0.99x | ⭐⭐⭐⭐⭐ Maintainable |
| Phase 3: custom parser | C++ | 2637ms | 1.89x | ⭐⭐⭐⭐ Good |
| Phase 4: SIMD | C++ | 2626ms | 1.90x | ⭐⭐⭐ Fair |
| Phase 5: mmap+custom | C++ | 490ms | 10.2x | ⭐ Fragile |
| Rust Journey | | | | |
| Phase 1: idiomatic | Rust | 1445ms | 3.44x | ⭐⭐⭐⭐⭐ Excellent |
| Phase 2: optimized alloc | Rust | 1383ms | 3.60x | ⭐⭐⭐⭐⭐ Excellent |
| Phase 3: fast-float | Rust | 1253ms | 3.97x | ⭐⭐⭐⭐⭐ Excellent |
| Phase 4: lexical | Rust | 1327ms | 3.75x | ⭐⭐⭐⭐⭐ Excellent |
| Phase 5: mmap+custom | Rust | 480ms | 10.4x | ⭐⭐ Fragile (but safer) |

The code quality rating for Rust’s Phase 5 is slightly higher because even with unsafe, the blast radius is more contained, and the rest of the language’s safety features still apply.

The Sweet Spot: Rust Phase 3 (1253ms)

Here’s the uncomfortable truth: the fastest code (Phase 5) is rarely the best code.

Why Phase 5 is problematic for most real-world applications:

- Limited Correctness: The custom parsers are extremely brittle. They don’t support scientific notation, proper infinity/NaN handling, or different locales, and would break on trivial format variations.

- Platform-Specific: mmap and madvise behave differently across operating systems.

- Maintenance Nightmare: Manual pointer manipulation (in C++) and unsafe blocks (in Rust) are hard to reason about, easy to get wrong, and create security risks.

- Marginal Real-World Benefit: Spread over 10 million trades, the roughly 770ms saved between Rust Phase 3 (1253ms) and Rust Phase 5 (480ms) amounts to about 77ns per trade, which would be completely dwarfed by network latency (1-50ms) or database queries (10-100ms) in a real system.

The Engineering Decision: Choose Rust Phase 3

```rust
// Uses the battle-tested and correct fast-float crate
use fast_float::parse;

fn parse_trade(line: &str) -> Option<Trade> {
    let mut parts = line.split(',');

    let timestamp_ms = parts.next()?.parse::<u64>().ok()?;
    // fast_float::parse returns a Result, so convert it with .ok() before `?`
    // in a function that returns Option
    let price: f64 = parse(parts.next()?).ok()?;
    let volume: f64 = parse(parts.next()?).ok()?;

    Some(Trade { timestamp_ms, price, volume })
}
```

Why this is the right choice for 99% of use cases:

- ✅ Fast Enough (1253ms): Still nearly 4x faster than the original C++ and 2x faster than the reasonably optimized C++.

- ✅ Production-Ready: It correctly handles edge cases and is cross-platform.

- ✅ Maintainable: The code is clear, concise, and relies on a well-tested library.

- ✅ Safe: It avoids unsafe blocks and manual memory management.

- ✅ Extendable: It’s easy to modify and build upon.

Conclusion

This was never truly a “Rust vs. C++” story. It’s a story about how systematic, measurement-driven engineering beats language dogma every time.

The Real Takeaways

- Measure, Don’t Assume: My initial assumptions about bottlenecks (std::map, I/O) were all wrong. The profiler was the only source of truth.

- “Best Practices” Are Context-Dependent: A tree-based map edged out a hash table. SIMD was useless. The “best” tool always depends on the specific constraints of the problem.

- The Approach Matters More Than the Language: Once the same aggressive, low-level techniques were applied, both versions ended up about 10x faster than the naive C++ baseline (490ms and 480ms). The final performance difference was negligible.

- Know When to Stop: The point of diminishing returns is real. The Phase 5 code offers ultimate performance but is fragile and hard to maintain. The “sweet spot” (Phase 3) provides excellent performance with production-ready code.

The Numbers That Tell the Story

C++ Journey:
- From: 4975ms (naive)
- To: 490ms (extreme)
- Sweet Spot: 2637ms (practical)

Rust Journey:
- From: 1445ms (idiomatic)
- To: 480ms (extreme)
- Sweet Spot: 1253ms (practical)

Final Recommendation: 1253ms (Rust Phase 3)
- 2x faster than practical C++
- Production-ready and safe
- Highly maintainable
- The right engineering trade-off

Epilogue: The Process

Profile, measure, identify the bottleneck, understand the trade-offs, and make an informed decision. That is the path to truly performant software.